Since the inception of Bitcoin, blockchain technology has redesigned the way we perceive value and conceptualise money as a whole. Capturing the imagination of many and sparking strong interest across different industries, blockchain has become one of the most exciting technological advancements of the 21st century as well as a highly sought-after infrastructure.

This sentiment is primarily fuelled by the innate desire to construct and partake in a revolution that is just as big as the creation of the internet was back in the 80s and 90s. While the internet initiated the realm of online intercommunication, blockchain is pioneering new forms of wealth creation through data and digital asset networks.

Each of these networks is its own decentralised technical ecosystem, designed to provide ledger and smart contract services to decentralised applications, also known as dApps. Ethereum, the second most valuable cryptocurrency, was conceived to make these applications easier to build and, ultimately, to give users more control over their finances and online data.

The Second Largest Cryptocurrency Facilitates The Process Of Building Decentralised Applications On-Chain - Image via CoinSwitch

The idea behind Ethereum is to build a kind of ‘world computer’ on which dApps can run, grow and expand their use cases and, eventually, challenge major businesses and platforms such as Amazon or Twitter. Ethereum would thus serve as a decentralised computer that is open to all and cannot be tampered with or shut down.

To achieve this, Ethereum will need to store and preserve huge amounts of data, a capability it currently lacks. Ethereum could, however, solve its scalability problem through a method called sharding, an upgrade set to go live with Ethereum 2.0.

What Is Sharding?

In computer science, sharding is a technique for scaling applications so that they can support more data. The process consists of breaking up large tables of data into smaller chunks, called shards, that are spread across multiple servers. Each shard holds its own subset of the data and is independent of the other shards.
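To make the idea concrete, the Python sketch below splits a small table of records across three shards by hashing each record’s key. Hash-based routing is only one common strategy (range-based splitting is another), and the function names and sample data are illustrative assumptions rather than how any particular database or blockchain assigns shards.

```python
import hashlib


def shard_for(key: str, num_shards: int) -> int:
    """Map a record key to a shard index with a stable hash (illustrative choice)."""
    digest = hashlib.sha256(key.encode("utf-8")).digest()
    return int.from_bytes(digest[:8], "big") % num_shards


def shard_table(rows: list[dict], key_field: str, num_shards: int) -> list[list[dict]]:
    """Split a table (a list of rows) into num_shards independent shards."""
    shards: list[list[dict]] = [[] for _ in range(num_shards)]
    for row in rows:
        shards[shard_for(row[key_field], num_shards)].append(row)
    return shards


# Hypothetical table of ten user records.
users = [{"id": f"user-{i}", "balance": i * 10} for i in range(10)]
shards = shard_table(users, "id", num_shards=3)
for index, shard in enumerate(shards):
    print(index, [row["id"] for row in shard])
```

Because the same key always hashes to the same shard, any server can work out where a record lives without consulting a central index.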

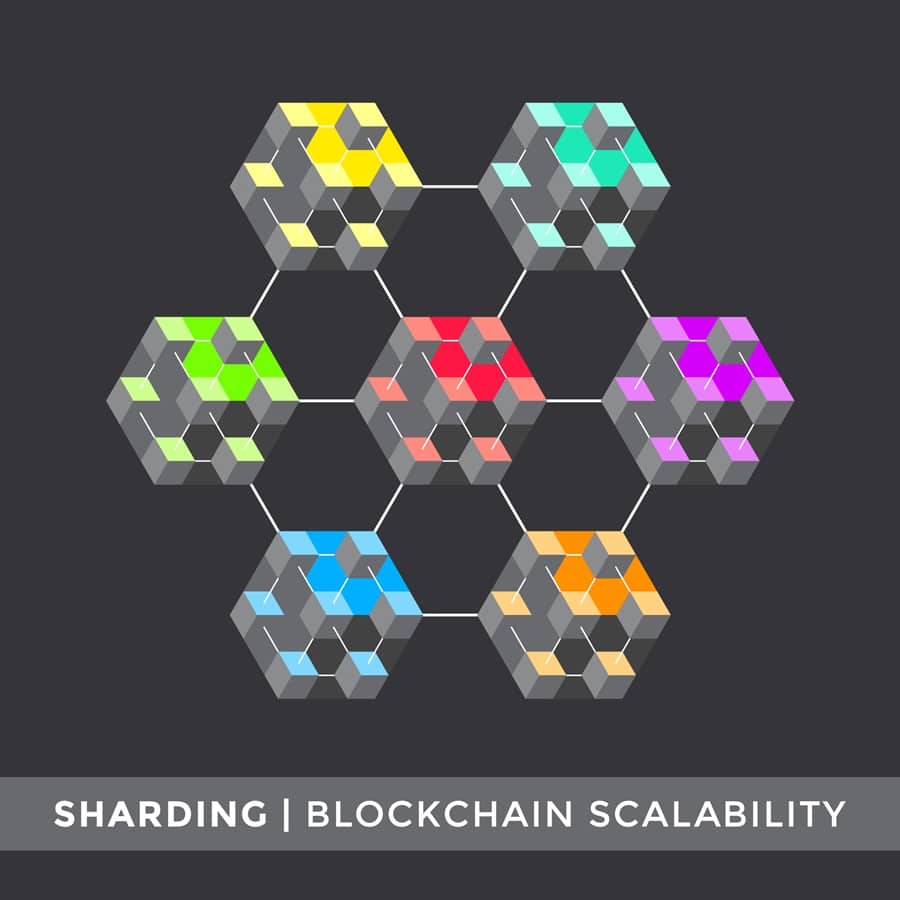

Sharding allows blockchains to scale more effectively.

Sharding is of particular benefit to blockchain networks: by splitting the network into smaller partitions, it reduces latency and data overload and lets the network process more transactions per second. Sharding becomes necessary when a dataset is too large to be stored in a single database and, given the number of projects and developers building on its network, this is the case with Ethereum.

In fact, according to analytics data, more than 3,000 decentralised applications (dApps) run on the Ethereum blockchain, so scalability through sharding is an absolute requirement if Ethereum is to maintain its leading position in the ecosystem and ensure the overall efficiency of its network.

How It Works

To fully understand how sharding works in blockchain, it is important to first review the functions carried out by nodes and how data is stored and processed.

Nodes are a critical component of blockchain infrastructure: without them, a blockchain’s data would not be accessible. Nodes are connected to one another and constantly exchange the latest blockchain data so that every node stays up to date. In short, nodes form the foundational layer of a blockchain, enabling it to store, preserve and spread data across its infrastructure.

Nodes are responsible for the correctness and reliability of storing the entered data in the distributed ledger - Image via CoinMonks

In decentralised networks, each node must be able to store critical information such as transaction history and account balances. By replicating data and transactions across many nodes, a blockchain can secure itself; however, this model is not the most effective in terms of scalability. While the distributed ledger gives a blockchain its decentralisation and security, a network that must process large numbers of transactions and store large quantities of data can become overwhelmed and congested, and suffer from latency.

Ethereum, for example, can process between 10 and 20 transactions per second, which is modest for a blockchain of its size. This slowness stems largely from its Proof of Work (PoW) consensus protocol and from the requirement, discussed below, that every node verify every transaction. As a result, the Ethereum blockchain is in dire need of scalability.
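A rough calculation shows why a single chain hits a ceiling when every node processes every transaction: throughput is capped by how much work fits in a block and how often blocks are produced. In the Python sketch below, the 15 million gas block limit, the 13-second block time and the 100,000-gas contract call are illustrative assumptions; 21,000 gas is the cost of a plain ETH transfer.

```python
def max_tps(block_gas_limit: int, gas_per_tx: int, block_time_s: float) -> float:
    """Upper bound on transactions per second if every block is filled with identical transactions."""
    txs_per_block = block_gas_limit // gas_per_tx
    return txs_per_block / block_time_s


# Illustrative assumptions, not measured network values.
GAS_LIMIT = 15_000_000       # gas available per block
BLOCK_TIME_S = 13.0          # seconds between blocks
SIMPLE_TRANSFER_GAS = 21_000  # cost of a plain ETH transfer
CONTRACT_CALL_GAS = 100_000   # rough figure for a typical dApp interaction

print(round(max_tps(GAS_LIMIT, SIMPLE_TRANSFER_GAS, BLOCK_TIME_S), 1))  # 54.9
print(round(max_tps(GAS_LIMIT, CONTRACT_CALL_GAS, BLOCK_TIME_S), 1))    # 11.5
```

Under these assumptions, a chain filled with contract interactions manages only around a dozen transactions per second, and adding more nodes does not raise the figure, because each new node repeats the same work.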

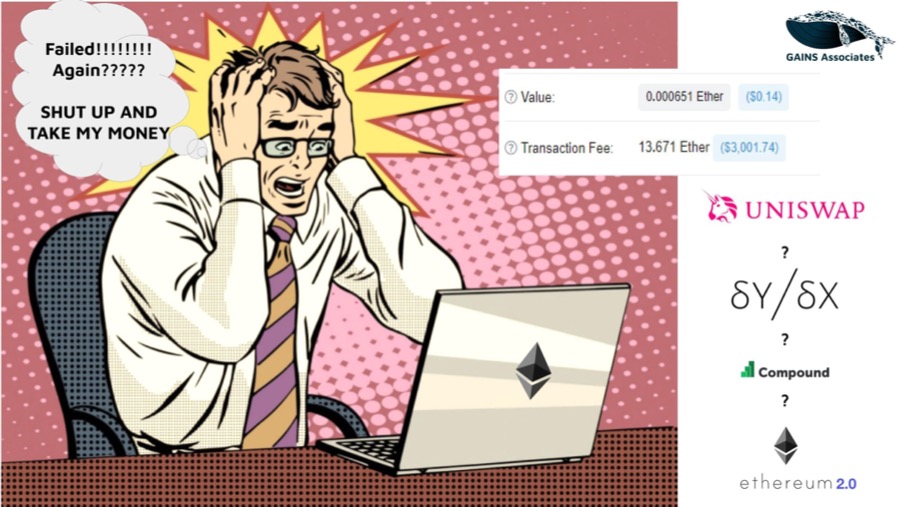

When The Network Is Congested Ethereum Gas Fees Can Be Extraordinarily High - Image via BitcoinTalk

Through sharding, however, a blockchain network can spread its workload horizontally so that no single node has to handle or process all of its transactions, allowing for a more compartmentalised and efficient design.

Horizontal Partitioning

Sharding is achieved by horizontally partitioning a database or network into groups of rows called shards. This horizontal architecture creates a more dynamic ecosystem, as it allows shards to perform specialised actions based on their characteristics. For instance, a shard might be responsible for storing the transaction history and state of a particular address, or it might cooperate with other shards to process transactions for a given digital asset.

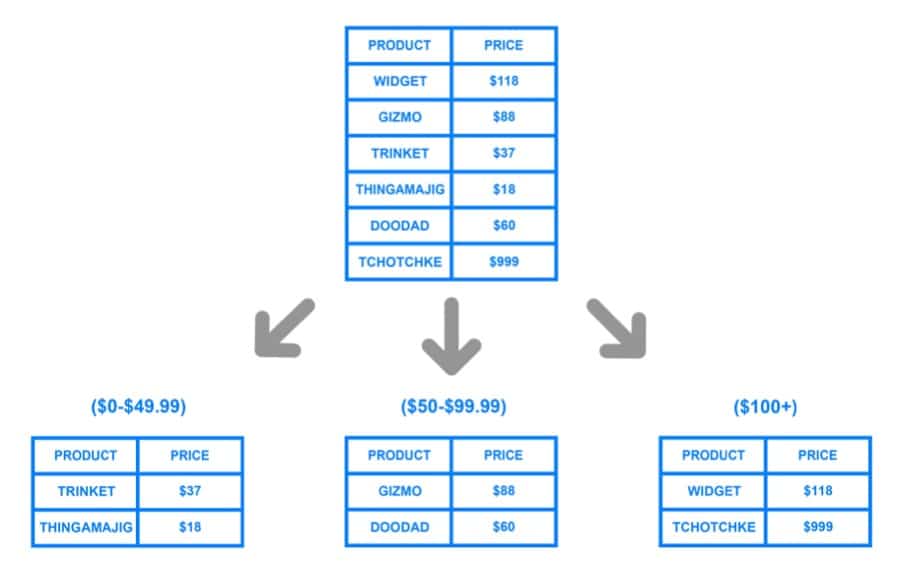

The model below illustrates how horizontal partitioning works.

Data Is Split Horizontally Into Smaller, More Efficient Components - Image via DigitalOcean

The model shows a large database table consisting of 6 rows. The pre-sharded table is broken down into 3 smaller, horizontal shards to make processing the large table more manageable. Horizontal partitioning does not change the table’s structure: each shard keeps the original columns but holds only a subset of the rows. The same concept can be applied to blockchain infrastructure, where the chain’s state is fragmented into smaller, more manageable chunks, also known as shards.
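The six-row model can be reproduced in a few lines of Python. This sketch assumes simple range-based partitioning, where consecutive rows go to the same shard and the rows are split evenly; the column names and values are made up for illustration.

```python
def horizontal_partition(rows: list[tuple], rows_per_shard: int) -> list[list[tuple]]:
    """Split a table into consecutive groups of rows; every shard keeps the same columns."""
    return [rows[i:i + rows_per_shard] for i in range(0, len(rows), rows_per_shard)]


COLUMNS = ("customer_id", "name", "city")  # hypothetical schema shared by every shard
TABLE = [
    (1, "Ana", "Lisbon"),
    (2, "Ben", "Leeds"),
    (3, "Chen", "Taipei"),
    (4, "Dara", "Dublin"),
    (5, "Eli", "Haifa"),
    (6, "Femi", "Lagos"),
]

shards = horizontal_partition(TABLE, rows_per_shard=2)
for number, shard in enumerate(shards, start=1):
    print(f"shard {number}: {shard}")
```

Each shard has the same three columns as the original table; only the set of rows differs.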

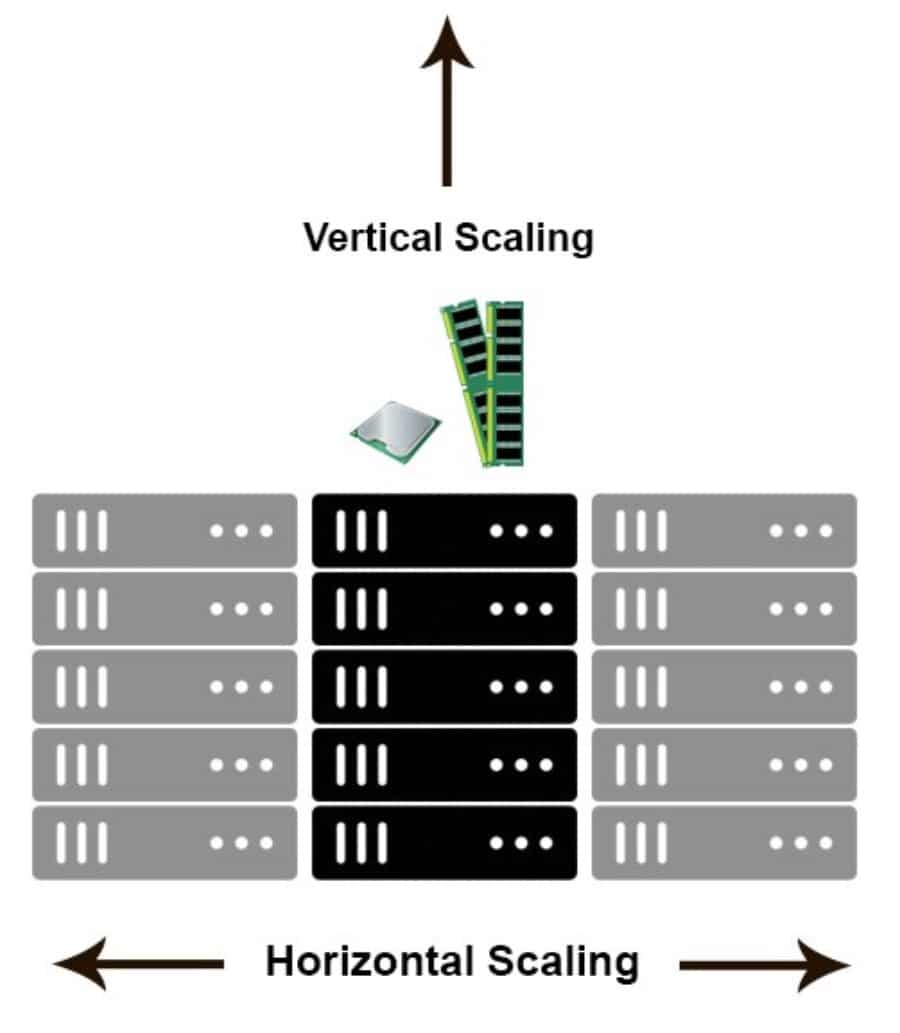

Horizontal vs Vertical Scaling

When dealing with the subject of scalability, blockchain infrastructures have a few options: Layer-2 solutions, vertical scaling and horizontal partitioning.

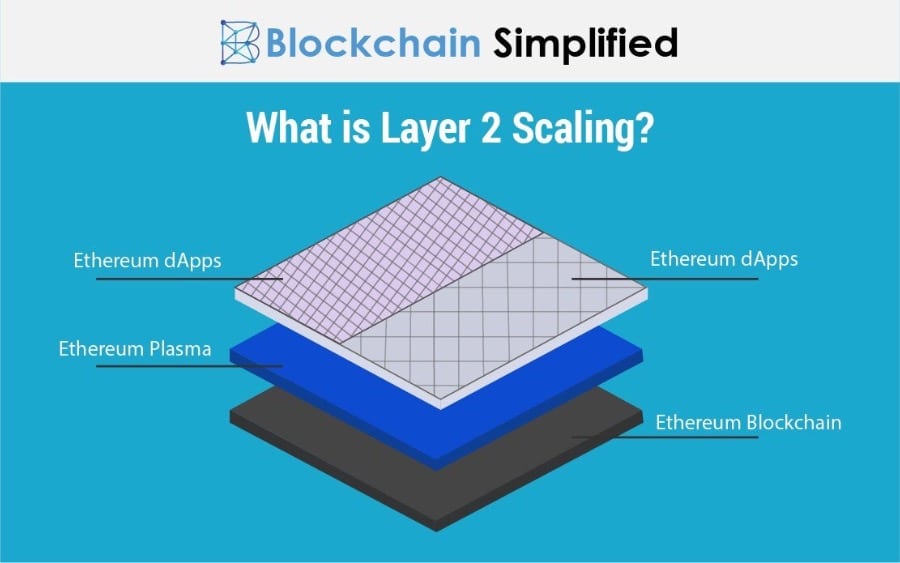

Layer-2s are off-chain scalability solutions built on top of a blockchain. The idea is to bypass the underlying base layer by placing an additional layer on top of it. This additional layer handles complex computations and mitigates the various bottlenecks in the architecture of the base layer. Plasma and Raiden are well-known examples of Layer-2 scalability, and perhaps the most notable project leveraging Layer-2 technology is Polygon, formerly Matic.

Layer-2 Scaling Solutions Operate Off-Chain And Are Built On Top Of A Blockchain's Base Layer - Image via BlockchainSimplified

Then comes vertical scaling. Vertical scaling expands a network’s capacity by adding more processing power and memory to the machines that run it, so that each machine can process more transactions. In practice, this means giving an existing virtual machine more processing power to bolster its capacity.

Vertical scaling solutions are limited in their effectiveness but much easier to implement than horizontal scaling. For example, if a virtual machine’s local memory is insufficient to process an incoming load of transactions, a vertical scaling solution could remedy this by adding more memory to the virtual machine, reducing its processing overload and boosting its transactional throughput.

Vertical Scaling Adds More Power And Memory To An Existing Machine - Image via StackOverflow

However, if an incoming transaction load exceeds the virtual machine’s hardware capacity, that is, if the hardware physically cannot process it, a horizontal scaling solution is required.

As discussed above, horizontal scaling, or sharding, improves the overall throughput of blockchain infrastructure by adding more clusters or individual virtual machines to the existing base layer. Although sharding is ultimately a more efficient way to scale, it also brings more complexity and takes longer to implement and make fully operational.
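The trade-off between the two approaches can be expressed as a toy capacity model. In this Python sketch, all numbers, including the hardware ceiling and the 10% coordination overhead between machines, are arbitrary assumptions chosen only to show the shape of the argument.

```python
def vertical_capacity(base_tps: float, upgrade_factor: float, hardware_ceiling: float) -> float:
    """Scale up one machine: capacity grows with the upgrade but never past the hardware ceiling."""
    return min(base_tps * upgrade_factor, hardware_ceiling)


def horizontal_capacity(base_tps: float, machines: int, coordination_overhead: float) -> float:
    """Scale out: run many machines in parallel, losing a fraction of capacity to coordination."""
    return base_tps * machines * (1.0 - coordination_overhead)


BASE_TPS = 100.0          # hypothetical capacity of one machine
HARDWARE_CEILING = 400.0  # hypothetical limit of the biggest machine available

for factor in (2, 4, 8):
    print("vertical x", factor, vertical_capacity(BASE_TPS, factor, HARDWARE_CEILING))
for machines in (2, 4, 8, 16):
    print("horizontal", machines, horizontal_capacity(BASE_TPS, machines, 0.10))
```

Vertical scaling stops improving once the ceiling is reached, while horizontal scaling keeps growing with the number of machines, at the cost of coordination overhead, which in a sharded blockchain corresponds to cross-shard communication.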

Moreover, when it comes to actively implementing scalability solutions in blockchain infrastructures, there are a few questions that need to be addressed from a purely technical and fundamental standpoint.

Sharding For Greater Decentralisation

There is a strong argument to be made about the link between sharding and decentralisation in blockchain. When introducing scalability to blockchain technology, it should be noted that, because these systems already operate as distributed networks, it is inherently difficult to increase their overall throughput simply by adding hardware entities such as nodes, miners or validators: each new entity repeats the same work rather than sharing it.

This is compounded by the fact that blockchain developers strive to preserve the overall immutability of the base layer chain and are determined not to tamper with its underlying architecture.

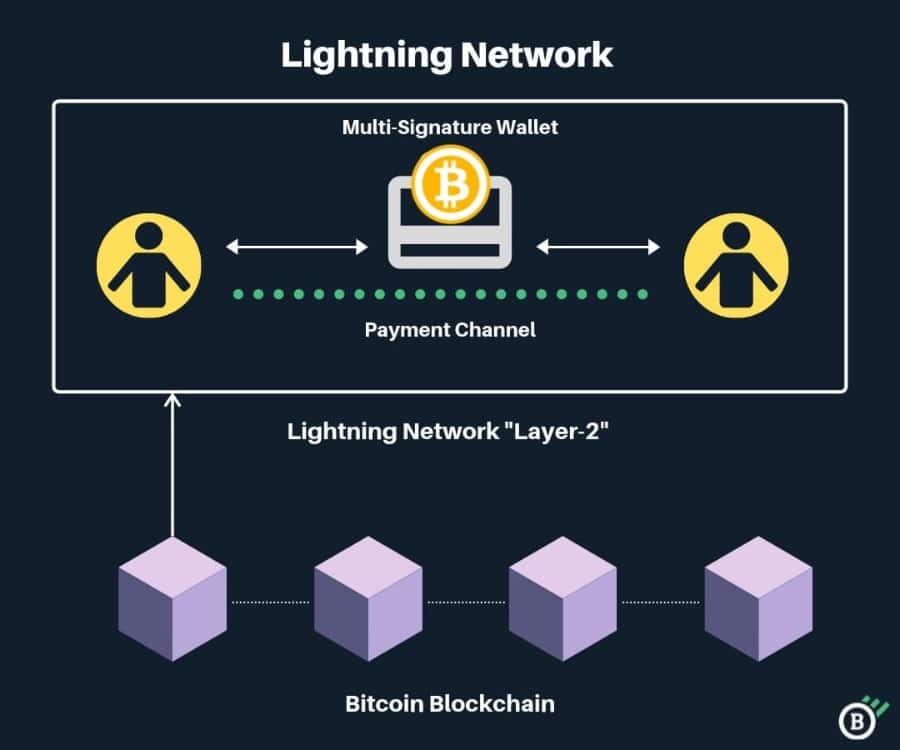

This, in turn, produces some advantages for scaling solutions as it allows them to leverage the existing security and reliability of the base layer chain to extend its potential transactional throughput, without touching its foundational infrastructure. The Lightning Network is a very good example of this, as it uses its technology to leverage the security of Bitcoin in order to increase the system’s overall transaction capacity.

The Lightning Network Uses The Reliability Of The Bitcoin Network To Boost Transaction Throughput - Image via Blockonomics

In addition, because sharding splits large chunks of data into smaller, more efficient horizontal partitions, it allows for a more decentralised ecosystem as a whole. If the entire data structure of a blockchain network resided in one supernode that only a few individuals could run and access, it would, first, be easier for attackers to manipulate and exploit that structure and, second, undermine the trustless, decentralised aspirations native to blockchain ecosystems.

Thus, scalability and sharding in particular can be viewed as integral components of the overall development of blockchain networks as well as ultimate catalysts for blockchain’s decentralised ‘raison d'être’.

Sharding With ETH 2.0

According to block explorer Etherscan, Ethereum full nodes already take up at least 5 terabytes of space, which is 10 times more than what the average computer can hold. Furthermore, Ethereum’s nodes are only going to get bigger and harder to run as the platform develops and its user base grows over time.

Without A Sustainable Scaling Solution, Blockchain Networks Will Struggle To Run Large Quantities Of Nodes - Image via EtherScan

Ethereum therefore clearly needs to scale soon, and sharding is the solution it has chosen. Let us now discuss how sharding will work with Ethereum 2.0.

Divide Nodes And Conquer

Along with Casper and Ethereum WebAssembly (ewasm), sharding is one of the primary features of the much-anticipated Ethereum 2.0 upgrade. Currently, each node on the Ethereum network must verify every transaction, a feature that inherently ensures the liveness of the network: even if 80% of Ethereum nodes went down simultaneously, the network would keep operating.

This model does not necessarily make Ethereum slower, but it is problematic because it does not make full use of the network’s resources. For instance, say three nodes on the Ethereum network, node X, node Y and node Z, are verifying a transaction dataset T. At present, each node must verify the whole of T for it to be confirmed.

While this ensures the overall security of the network, it creates a bottleneck through which every transaction must pass: the network has to wait for every node to verify every transaction, which is far from efficient. With Ethereum 2.0’s sharding proposal, however, the dataset T would be broken down into, for instance, T1, T2 and T3, and nodes X, Y and Z would each need to process only one of these smaller shards for the whole of T to be verified.
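The X, Y and Z example can be sketched in Python to compare how much checking each node does. The validity rule and the round-robin split of T into T1, T2 and T3 are hypothetical simplifications; real sharding assigns a committee of many nodes to each shard rather than a single node.

```python
def is_valid(tx: str) -> bool:
    """Hypothetical validity rule standing in for real signature and balance checks."""
    return tx.startswith("tx")


def split_dataset(transactions: list[str], pieces: int) -> list[list[str]]:
    """Break the dataset T into `pieces` smaller parts (T1, T2, ...) round-robin."""
    return [transactions[i::pieces] for i in range(pieces)]


def verify_unsharded(nodes: list[str], transactions: list[str]) -> tuple[bool, dict[str, int]]:
    """Current model: every node checks the whole of T."""
    work, verdicts = {}, []
    for node in nodes:
        checks = [is_valid(tx) for tx in transactions]
        work[node] = len(checks)
        verdicts.append(all(checks))
    return all(verdicts), work


def verify_sharded(nodes: list[str], transactions: list[str]) -> tuple[bool, dict[str, int]]:
    """Sharded model: each node checks only its own part of T."""
    work, verdicts = {}, []
    for node, part in zip(nodes, split_dataset(transactions, len(nodes))):
        checks = [is_valid(tx) for tx in part]
        work[node] = len(checks)
        verdicts.append(all(checks))
    return all(verdicts), work


T = [f"tx{i}" for i in range(9)]
nodes = ["X", "Y", "Z"]
print(verify_unsharded(nodes, T))  # (True, {'X': 9, 'Y': 9, 'Z': 9})
print(verify_sharded(nodes, T))    # (True, {'X': 3, 'Y': 3, 'Z': 3})
```

Both models reach the same verdict on T, but the total amount of checking drops from 27 to 9.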

‘We want to be able to process 10,000+ transactions per second without either forcing every node to be a supercomputer or forcing every node to store a terabyte of state data, and this requires a comprehensive solution where the workloads of state storage, transaction processing and even transaction downloading and re-broadcasting are spread out across nodes.’ ShardingFAQs - Ethereum Wiki

By breaking down data into smaller, individual subsets Ethereum can achieve greater transactional throughput and create a faster, more sustainable environment for it to pursue its future goals and continue growing as an ecosystem.

Sharding Mechanics

Ethereum 2.0 will also aim to maximise the efficiency of its network by transitioning its base layer from Proof of Work (PoW) to Proof of Stake (PoS). Proof of Work relies on miners to keep the network safe and synchronised and requires large amounts of computational power. Proof of Stake, on the other hand, replaces energy consumption with a financial commitment.

ETH 2.0’s Proof of Stake protocol, Casper, will no longer require miners. Instead, it will rely on validators who, by staking 32 ETH, can validate transactions and create new blocks. Casper will be delivered via the Beacon Chain, the system chain of Ethereum 2.0, which will also allow shards to communicate with one another.
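A simplified version of the validator side can be sketched in Python: filter out depositors below the 32 ETH threshold, then shuffle the remaining validators into per-shard committees. The seeded `random.Random` shuffle is a stand-in for the protocol’s on-chain randomness and is not secure; the validator names, deposits and shard count are hypothetical.

```python
import random

MIN_STAKE_ETH = 32  # stake required to become a validator, per the text above


def eligible_validators(deposits: dict[str, float]) -> list[str]:
    """Keep only depositors who staked at least the minimum."""
    return sorted(name for name, stake in deposits.items() if stake >= MIN_STAKE_ETH)


def assign_committees(validators: list[str], num_shards: int, seed: int) -> dict[int, list[str]]:
    """Randomly shuffle validators (insecure stand-in), then deal them out to shard committees."""
    shuffled = list(validators)
    random.Random(seed).shuffle(shuffled)
    return {shard: shuffled[shard::num_shards] for shard in range(num_shards)}


deposits = {"v1": 32, "v2": 16, "v3": 32, "v4": 40, "v5": 32, "v6": 31.9, "v7": 32}
validators = eligible_validators(deposits)
committees = assign_committees(validators, num_shards=2, seed=2020)
print(validators)
print(committees)
```

Re-running the shuffle with fresh randomness each period would rotate committees, which makes it harder for an attacker to concentrate validators on a single shard.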

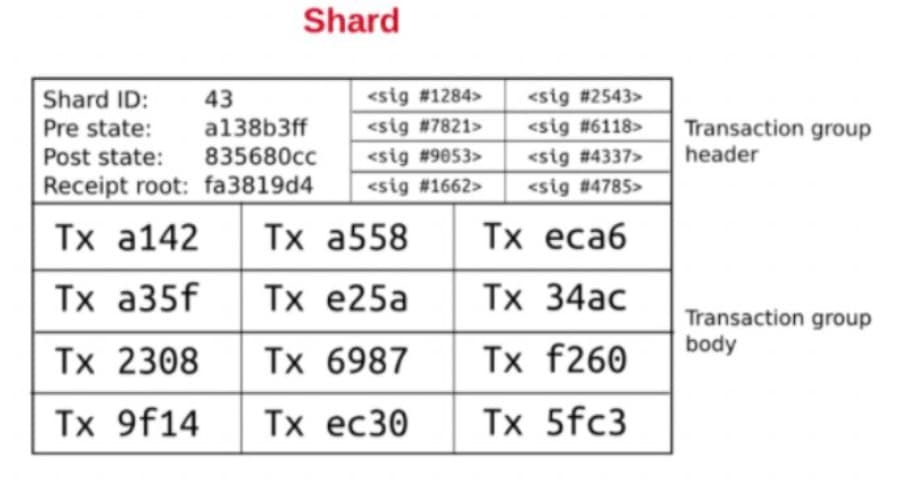

This constitutes quite an infrastructural step up, and users should expect a new, more optimised form of Ethereum overall. To begin with, ETH 2.0 will make each user account specific to a certain shard and will compartmentalise transactions into ‘transaction packages’, assigning each package to a particular shard. These packages will be further subdivided into transaction group headers and bodies, with each component defining specific characteristics of the shard.

The Shard's Transaction Group Header And Body - Image via BlockStreet Medium

As illustrated in the image above, each transaction group header has a distinct left and right part. The left part of the header contains the following components:

- Shard ID: Identifies the shard that the transaction group belongs to.

- Pre-State Root: The state root of that shard before the transaction group is applied to it.

- Post-State Root: The state root of that shard after the transaction group is applied to it.

- Receipt Root: Confirmation that the transaction group has entered the shard’s state.
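The four header fields can be modelled with a small Python data class. To keep the sketch self-contained, a SHA-256 hash of the JSON-encoded balances and receipts stands in for real Merkle roots, and the transfer format, balances and shard number are hypothetical.

```python
import hashlib
import json
from dataclasses import dataclass


def digest(obj) -> str:
    """Deterministic hash of a JSON-serialisable object (stand-in for a Merkle root)."""
    return hashlib.sha256(json.dumps(obj, sort_keys=True).encode("utf-8")).hexdigest()


@dataclass(frozen=True)
class TransactionGroupHeader:
    shard_id: int
    pre_state_root: str
    post_state_root: str
    receipt_root: str


def apply_group(shard_id: int, state: dict[str, int], txs: list[tuple[str, str, int]]):
    """Apply (sender, recipient, amount) transfers to one shard and build the group's header."""
    new_state = dict(state)
    receipts = []
    for sender, recipient, amount in txs:
        success = new_state.get(sender, 0) >= amount
        if success:
            new_state[sender] = new_state.get(sender, 0) - amount
            new_state[recipient] = new_state.get(recipient, 0) + amount
        receipts.append({"from": sender, "to": recipient, "amount": amount, "success": success})
    header = TransactionGroupHeader(shard_id, digest(state), digest(new_state), digest(receipts))
    return header, new_state, receipts


header, balances, receipts = apply_group(
    4, {"alice": 50, "bob": 5}, [("alice", "bob", 20), ("bob", "carol", 100)]
)
print(header.shard_id, balances, [r["success"] for r in receipts])  # 4 {'alice': 30, 'bob': 25} [True, False]
```

Anyone holding the pre-state and the transaction group can recompute the post-state root and receipt root and compare them with the header.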

The right part of the header lists a group of randomly selected validators who verify transactions inside the shard. Transaction packages must also pass a two-step verification process before being appended to the main chain. First, validators randomly assigned to the shard vote on the validity of each transaction package. If they vote yes, a separate committee on the Beacon Chain must verify this vote using a sharding manager smart contract. If the second vote is also positive, the transaction package is appended to the main chain and becomes part of the public ledger, establishing an immutable cross-link to the transaction group on that shard.
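The two-step approval can be sketched in Python as follows. The more-than-two-thirds voting threshold and the data shapes are assumptions made for illustration; the text above does not specify them.

```python
from dataclasses import dataclass, field


def supermajority(votes: list[bool]) -> bool:
    """Assumed threshold: strictly more than two thirds of the committee votes yes."""
    return sum(votes) * 3 > len(votes) * 2


@dataclass
class BeaconChain:
    # shard id -> roots of transaction packages cross-linked into the main chain
    crosslinks: dict[int, list[str]] = field(default_factory=dict)

    def submit(self, shard_id: int, package_root: str,
               shard_votes: list[bool], beacon_votes: list[bool]) -> bool:
        if not supermajority(shard_votes):
            return False  # step 1 failed: the shard's own committee rejected the package
        if not supermajority(beacon_votes):
            return False  # step 2 failed: the Beacon Chain committee did not confirm the vote
        self.crosslinks.setdefault(shard_id, []).append(package_root)
        return True


beacon = BeaconChain()
print(beacon.submit(1, "0xabc", [True, True, True, False], [True, True, True]))  # True
print(beacon.submit(2, "0xdef", [True, False, False], [True, True, True]))       # False
print(beacon.crosslinks)  # {1: ['0xabc']}
```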

Cross-Shard Communication

ETH 2.0’s infrastructure will let shards communicate with one another, with the goal of producing a truly interoperable and mutually beneficial ecosystem. Vitalik Buterin, co-founder of Ethereum, described the concept of cross-shard communication at Devcon 2018 in Prague:

Imagine that Ethereum has been split into thousands of islands. Each island can do its own thing. Each of the island has its own unique features and everyone belonging on that island i.e. the accounts, can interact with each other AND they can freely indulge in all its features. If they want to contact other islands, they will have to use some sort of protocol. - Vitalik Buterin Devcon 2018 - LinkedIn

Keeping Buterin’s island analogy in mind, it is clear that shards need a specific cross-shard protocol to communicate with one another. ETH 2.0’s protocol of choice is the so-called ‘receipt paradigm’. As shown in the header image above, the Receipt Root is a component of the transaction group header and is used to confirm, via a Merkle tree, that a transaction group has entered the shard’s state.

Every transaction in the group generates a receipt in the shard it belongs to. The Beacon Chain, the system chain of Ethereum 2.0, will then use its distributed shared memory to store all these transaction receipts, hence the term ‘receipt paradigm’. Other shards can read and act on the receipts stored in the Beacon Chain but, because the blockchain is immutable, they cannot tamper with the receipts themselves.
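The receipt idea can be illustrated with a simple binary Merkle tree in Python: the receipt root commits to every receipt, and another shard that knows only the root can check a single receipt with a short proof. The hashing layout (SHA-256, duplicating the last node on odd-sized levels) and the receipt strings are assumptions for illustration and do not match Ethereum’s actual data structures.

```python
import hashlib


def h(data: bytes) -> bytes:
    return hashlib.sha256(data).digest()


def merkle_root(leaves: list[bytes]) -> bytes:
    """Root of a binary Merkle tree over the leaves (requires at least one leaf)."""
    level = [h(leaf) for leaf in leaves]
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])  # odd level: duplicate the last node
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]


def merkle_proof(leaves: list[bytes], index: int) -> list[tuple[bytes, bool]]:
    """Sibling hashes from one leaf up to the root; the flag is True when the sibling sits on the right."""
    level = [h(leaf) for leaf in leaves]
    proof = []
    while len(level) > 1:
        if len(level) % 2:
            level.append(level[-1])
        sibling = index ^ 1
        proof.append((level[sibling], sibling > index))
        level = [h(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
        index //= 2
    return proof


def verify_receipt(leaf: bytes, proof: list[tuple[bytes, bool]], root: bytes) -> bool:
    """Recompute the path from a receipt to the root and compare with the published root."""
    node = h(leaf)
    for sibling, sibling_on_right in proof:
        node = h(node + sibling) if sibling_on_right else h(sibling + node)
    return node == root


receipts = [b"alice->bob:20", b"bob->carol:5", b"dave->erin:7"]
root = merkle_root(receipts)
proof = merkle_proof(receipts, 1)
print(verify_receipt(receipts[1], proof, root))        # True
print(verify_receipt(b"bob->carol:500", proof, root))  # False
```

The proof grows with the logarithm of the number of receipts, so a shard can check one receipt without downloading all of them.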

Ethereum 2.0 will make sharding a reality, and Ethereum a new blockchain.

This is an important feature because it lets shards know exactly when it is appropriate to communicate with each other, so that they do so only when needed. In essence, this ETH 2.0-specific design allows shards to verify and benefit from each other’s activity while preserving the finality and purpose of each individual shard.

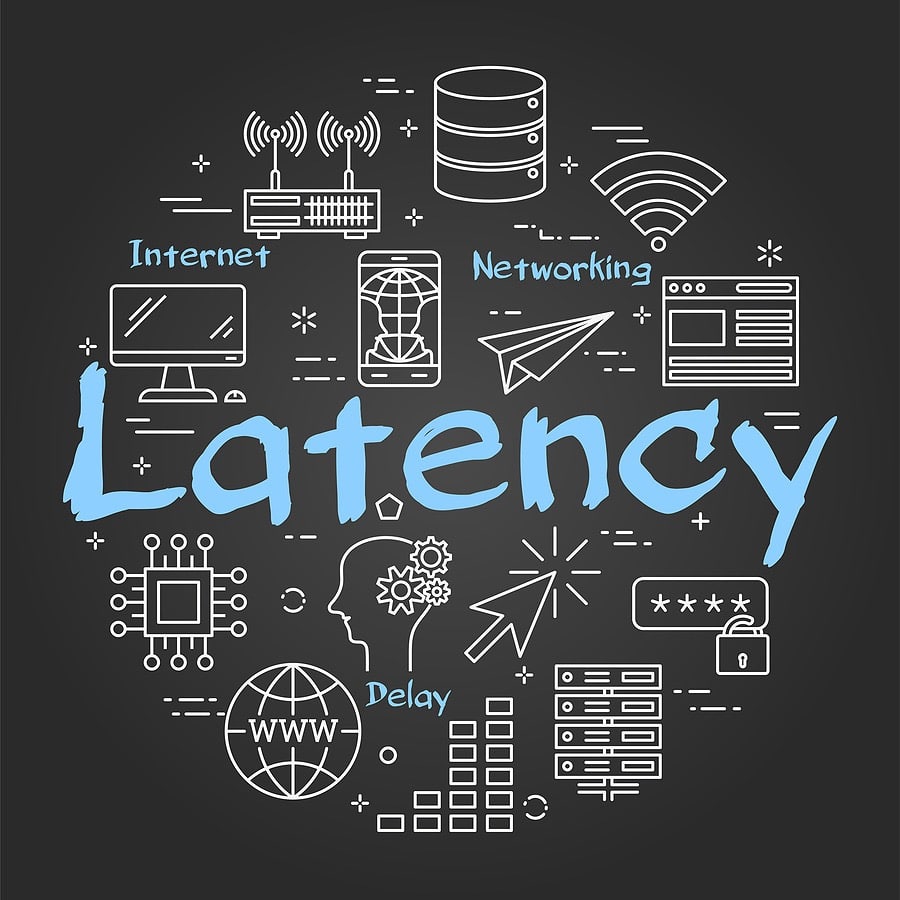

Cross-Shard Operational Complexities And Latency

Two of the most pressing issues in ETH 2.0 cross-shard communication are operational complexity and latency. Vitalik Buterin has put forward two proposals to address them and ensure the development of a fully sharded Ethereum.

These proposals will:

- Funnel several responsibilities and tasks from the individual shards to the Beacon Chain.

- Ensure that each shard has its own state and execution.

- Reduce complexities in the structure of the shard and preserve various network functionalities.

- Give shards enough functionality to support smart contract execution in the various transaction groups.

- Introduce 3 new transaction types: New Execution Script, New Validator and Withdrawal. The New Execution Script will create an execution script that can hold ETH, the New Validator can add new validators to the system, and Withdrawal can remove validators from the Beacon Chain.

Another issue ETH 2.0 seeks to resolve is network latency in cross-shard communication. If, for instance, a user wants to send a token from shard X to shard Y, a transaction on shard X destroys the token there and saves a record of the value sent, the recipient address and the destination shard, in this case shard Y.

After some delay, every shard learns the state roots of the other shards. Shard Y can then use the receipt produced on shard X to verify that the transfer really happened and has not already been claimed, and credit the value to the recipient’s address on shard Y. In effect, the value destroyed on shard X is recreated on shard Y.
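The burn-and-claim flow can be sketched with two in-memory shards in Python. Here, looking the receipt up in the source shard’s receipt table stands in for checking a Merkle proof against that shard’s state root once it is known, and the class and method names are hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Receipt:
    receipt_id: int
    sender: str
    recipient: str
    amount: int
    dest_shard: str


class Shard:
    """In-memory stand-in for one shard's balances and receipts."""

    def __init__(self, name: str, balances: dict[str, int]):
        self.name = name
        self.balances = dict(balances)
        self.receipts: dict[int, Receipt] = {}      # receipts issued by this shard
        self.claimed: set[tuple[str, int]] = set()  # (source shard, receipt id) already credited here
        self._next_id = 0

    def send_cross_shard(self, sender: str, recipient: str, amount: int, dest_shard: str) -> Receipt:
        """Destroy the tokens on this shard and record where they should reappear."""
        if amount <= 0:
            raise ValueError("invalid amount")
        if self.balances.get(sender, 0) < amount:
            raise ValueError("insufficient balance")
        self.balances[sender] -= amount
        receipt = Receipt(self._next_id, sender, recipient, amount, dest_shard)
        self.receipts[receipt.receipt_id] = receipt
        self._next_id += 1
        return receipt

    def claim(self, receipt: Receipt, source: "Shard") -> None:
        """Credit a receipt from another shard once that shard's state is known here."""
        if receipt.dest_shard != self.name:
            raise ValueError("receipt is for another shard")
        # Stand-in for checking a Merkle proof against the source shard's state root.
        if source.receipts.get(receipt.receipt_id) != receipt:
            raise ValueError("unknown receipt")
        key = (source.name, receipt.receipt_id)
        if key in self.claimed:
            raise ValueError("receipt already claimed")
        self.claimed.add(key)
        self.balances[receipt.recipient] = self.balances.get(receipt.recipient, 0) + receipt.amount


shard_x = Shard("X", {"alice": 100})
shard_y = Shard("Y", {})
receipt = shard_x.send_cross_shard("alice", "bob", 30, dest_shard="Y")
shard_y.claim(receipt, source=shard_x)
print(shard_x.balances, shard_y.balances)  # {'alice': 70} {'bob': 30}
```

Recording claimed receipts on the destination shard is what stops the same receipt from being credited twice.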

Ethereum 2.0 Aspires To Solve ETH's Slow Transaction Throughput And Network Latency - Image via CNSPartners

As one can imagine, this process introduces significant delays and works against ETH 2.0’s scalability objectives. Ethereum has therefore proposed a solution called Fast Cross-Shard Transfers Via Optimistic Receipt Roots. Although the name may sound intimidating, it is a rather straightforward system that stores conditional states and is optimistic about the validity of a submitted transaction.

Put simply, suppose Bob has 50 tokens on Shard B and Alice sends him 20 tokens from Shard A, but Shard B does not yet know the state of Shard A and therefore cannot fully validate the transfer. Bob’s account on Shard B will then temporarily hold a conditional state: 70 tokens if Alice’s transfer is genuine, or 50 tokens if it is not.

This works because validators who authenticate the transfer from Shard A to Shard B can be confident that it is final and that Bob’s account will eventually resolve to 70 tokens once Alice’s transaction is validated. Validators can therefore act as if Bob already has the 70 tokens.

Once the transaction from Shard A to Shard B is verified it becomes permanent, or is simply reverted if it was not valid. This Fast Cross-Shard Transfer system greatly reduces the bottlenecks caused by network latency and, if implemented successfully, will allow Ethereum 2.0 to accelerate its throughput and improve its scalability overall.
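Bob’s conditional balance can be modelled in Python as a confirmed balance plus a set of pending incoming transfers, each tied to a receipt that has not yet been verified. The class and method names are hypothetical, and the model ignores how validators decide which receipts to trust.

```python
class OptimisticAccount:
    """Balance that can temporarily depend on unverified cross-shard transfers."""

    def __init__(self, balance: int):
        self.balance = balance             # confirmed balance
        self.pending: dict[str, int] = {}  # receipt id -> incoming amount awaiting verification

    def receive_optimistically(self, receipt_id: str, amount: int) -> None:
        self.pending[receipt_id] = amount

    def possible_balances(self) -> list[int]:
        """Every balance the account could resolve to, depending on which pending receipts are valid."""
        outcomes = {self.balance}
        for amount in self.pending.values():
            outcomes |= {value + amount for value in outcomes}
        return sorted(outcomes)

    def optimistic_balance(self) -> int:
        """The balance validators act on if they assume every pending receipt is genuine."""
        return self.balance + sum(self.pending.values())

    def resolve(self, receipt_id: str, valid: bool) -> None:
        """Make a pending transfer permanent, or drop it (revert) if it turned out to be invalid."""
        amount = self.pending.pop(receipt_id)
        if valid:
            self.balance += amount


bob = OptimisticAccount(50)
bob.receive_optimistically("alice-shard-a-1", 20)
print(bob.possible_balances(), bob.optimistic_balance())  # [50, 70] 70
bob.resolve("alice-shard-a-1", valid=True)
print(bob.balance)  # 70
```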

Ethereum 2.0 aside, let us now briefly discuss how sharding is implemented in other projects and, more specifically, in Zilliqa, NEAR and Polkadot.

Sharding With Zilliqa

Another project with an interesting value proposition that also implements sharding is Zilliqa. Founded in 2017, Zilliqa is a blockchain for computationally intensive enterprise and emerging-tech use cases, whose key functionalities are sharding and parallelised transaction processing.

Next-Gen high throughput blockchain platform.

While its network uses a Proof of Work (PoW) algorithm that requires heavy computational power, Zilliqa is designed to scale on demand by onboarding additional ZIL nodes and miners, which allows more shards to be added to the network.

ZIL is Zilliqa’s native token. According to Zilliqa’s sharding model, if the network had 20,000 nodes, it could be divided into 25 sub-networks of 800 nodes each that process data simultaneously, in parallel. Zilliqa’s architecture is intricate because it uses two blockchains, also running in parallel: transaction blocks, called TX-Blocks, which contain transactions sent by network users, and directory service blocks, or DS-Blocks, which contain data on the network miners that secure and support Zilliqa’s infrastructure.

Zilliqa’s blockchain sharding is a two-fold process. First, it elects Directory Service (DS) Committee nodes that initiate the sharding process and assign nodes to each shard. Second, once transactions are verified within a shard, they are verified by the whole network and enter a global state that brings every transaction from every shard into a single, verifiable source of truth on the Zilliqa blockchain.
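The 20,000-node example works out as follows in Python. Randomly shuffling nodes and dealing them into fixed-size shards is a simplification: the seeded shuffle stands in for the DS Committee’s actual assignment rules, which this sketch does not model, and the node IDs are hypothetical.

```python
import random


def form_shards(node_ids: list[str], shard_size: int, seed: int) -> tuple[list[list[str]], list[str]]:
    """Shuffle nodes, deal them into full shards of shard_size, and return any nodes left over."""
    nodes = list(node_ids)
    random.Random(seed).shuffle(nodes)  # stand-in for the DS Committee's assignment
    num_shards = len(nodes) // shard_size
    shards = [nodes[i * shard_size:(i + 1) * shard_size] for i in range(num_shards)]
    return shards, nodes[num_shards * shard_size:]


nodes = [f"node-{i}" for i in range(20_000)]
shards, leftover = form_shards(nodes, shard_size=800, seed=7)
print(len(shards), {len(s) for s in shards}, len(leftover))  # 25 {800} 0
```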

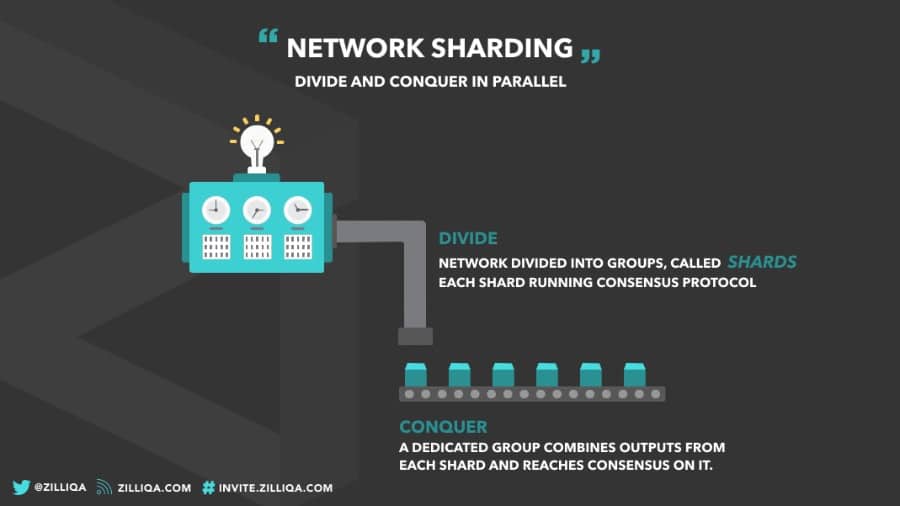

Zilliqa's Divide And Conquer Sharding Model - Image via Undervalued.top

In essence, a sharded transaction on the Zilliqa network works as follows. A user launches a transaction, which is routed to a shard that validates it and combines it with other transactions into a ‘microblock’. The shard reaches consensus on the validity of the microblock, which is then passed to the Directory Service Committee, which merges the microblocks into the ‘final block’. The DS Committee then reaches final consensus on this block before appending it to the blockchain.
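The microblock pipeline can be sketched as two rounds of voting in Python. The more-than-two-thirds consensus threshold, the duplicate filter standing in for the shard’s validation, the committee sizes and the transaction strings are illustrative assumptions, not Zilliqa’s actual parameters or data formats.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class MicroBlock:
    shard_id: int
    transactions: tuple[str, ...]


@dataclass(frozen=True)
class FinalBlock:
    height: int
    microblocks: tuple[MicroBlock, ...]


def consensus(yes_votes: int, committee_size: int) -> bool:
    """Assumed threshold: strictly more than two thirds of the committee agrees."""
    return yes_votes * 3 > committee_size * 2


def build_microblock(shard_id: int, txs: list[str], yes_votes: int, shard_size: int) -> Optional[MicroBlock]:
    """A shard bundles its transactions and agrees on them as a microblock."""
    unique_txs = tuple(dict.fromkeys(txs))  # hypothetical check: drop duplicate submissions, keep order
    return MicroBlock(shard_id, unique_txs) if consensus(yes_votes, shard_size) else None


def build_final_block(height: int, microblocks: list[Optional[MicroBlock]],
                      ds_yes_votes: int, ds_size: int) -> Optional[FinalBlock]:
    """The DS Committee merges the agreed microblocks and reaches final consensus."""
    agreed = tuple(mb for mb in microblocks if mb is not None)
    return FinalBlock(height, agreed) if consensus(ds_yes_votes, ds_size) else None


micro = [
    build_microblock(0, ["tx1", "tx2", "tx2"], yes_votes=700, shard_size=800),
    build_microblock(1, ["tx3"], yes_votes=600, shard_size=800),
    build_microblock(2, ["tx4"], yes_votes=400, shard_size=800),  # fails shard consensus
]
final = build_final_block(1, micro, ds_yes_votes=9, ds_size=10)
print([mb.shard_id for mb in final.microblocks])  # [0, 1]
```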

Sharding With NEAR

NEAR Protocol implements an alternative form of sharding. Launched in 2020, NEAR is a community-governed, sharded Proof of Stake (PoS) blockchain platform with interoperability and scalability at its core.

NEAR leverages its Nightshade technology to achieve high throughput by having validators process transactions in parallel, increasing the blockchain’s overall transaction capacity. The sharding model based on shard chains and a beacon chain is powerful, but it presents some infrastructural complexity because the shard chains and the beacon chain are, in essence, separate entities within the same blockchain ecosystem.

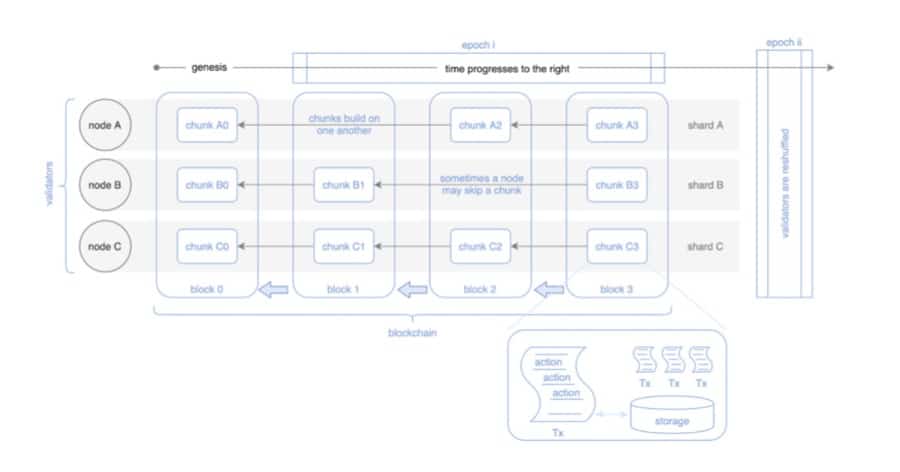

NEAR Protocol Splits All Transactions Into Chunks And Looks To Accumulate Them In One Major Block - Image via LearnNEAR

NEAR Protocol addresses this with a compelling sharding design that models its infrastructure as a single blockchain, in which each block logically contains all the transactions for all the shards and changes the state of all the shards. Through Nightshade, NEAR preserves this single-chain design by splitting the transactions of each block into physical chunks, ideally one chunk per shard, and aggregating them into one block.
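Nightshade’s block layout, one logical block made of per-shard chunks, can be modelled with two Python data classes. The alphabetical routing of accounts to shards and the `account:action` transaction format are hypothetical simplifications for this sketch.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Chunk:
    shard_id: int
    transactions: tuple[str, ...]


@dataclass(frozen=True)
class Block:
    height: int
    chunks: tuple[Chunk, ...]  # ideally one chunk per shard

    def all_transactions(self) -> list[str]:
        """Logically, the block still contains every transaction for every shard."""
        return [tx for chunk in self.chunks for tx in chunk.transactions]


def shard_of(tx: str, boundaries: list[str]) -> int:
    """Hypothetical routing: the sender account falls into one alphabetical range per shard (boundaries sorted)."""
    account = tx.split(":", 1)[0]
    return sum(account >= boundary for boundary in boundaries)


def produce_block(height: int, pending: list[str], boundaries: list[str]) -> Block:
    """Split pending transactions into one chunk per shard and aggregate them into one block."""
    buckets: list[list[str]] = [[] for _ in range(len(boundaries) + 1)]
    for tx in pending:
        buckets[shard_of(tx, boundaries)].append(tx)
    return Block(height, tuple(Chunk(i, tuple(b)) for i, b in enumerate(buckets)))


pending = ["alice:transfer", "mike:stake", "zoe:call", "bob:transfer"]
block = produce_block(1, pending, boundaries=["h", "p"])
for chunk in block.chunks:
    print(chunk.shard_id, chunk.transactions)
```

Each shard only needs to process its own chunk, yet the block as a whole still records every transaction, which is the single-chain property described above.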

Sharding With Polkadot

Polkadot utilises a sharding model that differs entirely from the Ethereum-based sharding mechanism and makes use of its cross-chain composability features to activate sharding through parachains. Polkadot’s native design is that of a multi-chain network that provides Layer-0 reliability, security and scalability to all the Layer-1 blockchains built on top of its architecture.

These Layer-1s make up the parachain network, with parachains being the diverse blockchains that run in parallel within the Polkadot ecosystem, on both the Polkadot and Kusama networks.

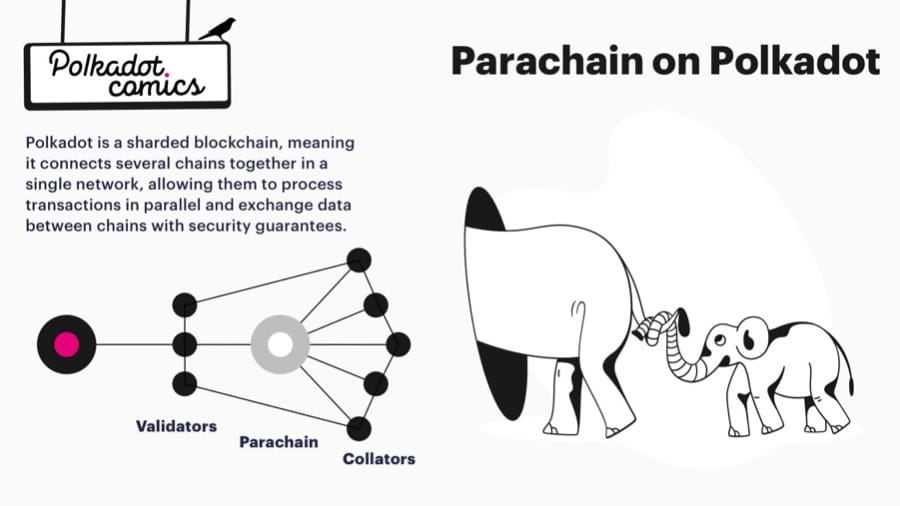

Parachains Allow Polkadot To Process Multiple Parallelised Transactions And Perform Cross-Chain Sharding - Image via PolkadotComics

Parachains are connected to and secured by Polkadot’s Relay Chain, and they benefit from the security, scalability and interoperability provided by Polkadot. The parachain network can be considered an advanced sharding paradigm that inherits the features of the Polkadot main chain and operates in a parallelised, shard-like fashion.

Parachain projects such as Clover, for instance, can provide gasless transactions and bring new layers of scalability and interoperability to unsharded Layer-1 infrastructures. This is because Polkadot, as a multi-chain network built on parachains, is able to process multiple parallelised transactions on several chains at once, ultimately embodying the concept of cross-chain sharding.
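A toy model of this parallelism is sketched below in Python: each parachain applies its own transactions to its own state concurrently, and the Relay Chain records the resulting head of every parachain. The thread pool, the `(account, delta)` transaction format, the parachain names and the head hash are illustrative assumptions and do not reflect how Polkadot validators actually produce or check parachain blocks; Python threads show the structure rather than true CPU parallelism.

```python
import hashlib
from concurrent.futures import ThreadPoolExecutor


def execute_parachain_block(name: str, state: dict[str, int], txs: list[tuple[str, int]]):
    """One parachain applies its own (account, delta) updates to its own state."""
    new_state = dict(state)
    for account, delta in txs:
        new_state[account] = new_state.get(account, 0) + delta
    head = hashlib.sha256(repr(sorted(new_state.items())).encode("utf-8")).hexdigest()
    return name, new_state, head


def relay_chain_round(parachains: dict[str, dict[str, int]],
                      pending: dict[str, list[tuple[str, int]]]) -> dict[str, str]:
    """Run every parachain's block concurrently, then record each parachain's new head."""
    with ThreadPoolExecutor() as pool:
        futures = [
            pool.submit(execute_parachain_block, name, state, pending.get(name, []))
            for name, state in parachains.items()
        ]
        results = [future.result() for future in futures]
    heads = {}
    for name, new_state, head in results:
        parachains[name] = new_state
        heads[name] = head
    return heads


parachains = {"para-a": {"alice": 10}, "para-b": {"bob": 5}}  # hypothetical parachains
heads = relay_chain_round(parachains, {"para-a": [("alice", -3), ("carol", 3)], "para-b": [("bob", 1)]})
print(parachains)
print(sorted(heads))
```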

Conclusion

Sharding is the foundation of scalability in decentralised networks and it offers a variety of exciting opportunities for those projects looking to implement it or at least experiment with its concept.

The issue at present is that blockchain networks are growing at an exponential rate thanks to their popularity, use cases and, ultimately, demand. This growth causes a series of on-chain bottlenecks, complexities and network inefficiencies, and highlights their pressing need for a suitable scaling solution.

Ethereum 2.0 is set to introduce its sharding capabilities in the not-so-distant future, one of the most anticipated events in the history of the ETH blockchain. Expectations remain incredibly high: if ETH 2.0’s sharding is successful, it will guarantee the long-term scalability of the Ethereum network and accompany the project in its quest to become the decentralised, open-to-all ‘world computer’ that is impossible to shut down.